This is part 4 of my series on facial recognition. Part 1 was about face detection, part 2 was about facial landmarks and part 3 was about accuracy over time.

This post I will compare US congressmen to a public dataset of mugshots. This was inspired by the ACLU claim that Amazon’s face recognition falsely matched 28 members of Congress with mugshots.

The ACLU post didn’t include any source code or source of the mugshot datasets or the congressman dataset. It does note that the mugshot dataset consisted of 25,000 publicly available arrest photos. I found NIST special dataset specifically for this purpose. The dataset contains 1573 individuals (cases) 1495 male and 78 female. It has front as well as profiles of the individuals, although I only used the front facing images.

Below is a sample of a few front-facing mugshots.

The Congressmen headshots were taken from List of Congressmembers page in wikipedia. The formatting of the image names were inconsistent so I had to clean them up, and a few of the images were too large to send directly to Rekognition so I found alternative smaller images.

Below is a sample of a few congress people.

The way AWS Rekognition works is that you first create a collection and then upload images to that collection. The service then detects faces in that image and saves only the vector representation of the face. The service does not save your image. Unfortunately AWS does not allow you to access that vector representation since that would allow you to do your own comparison rather than paying for the service.

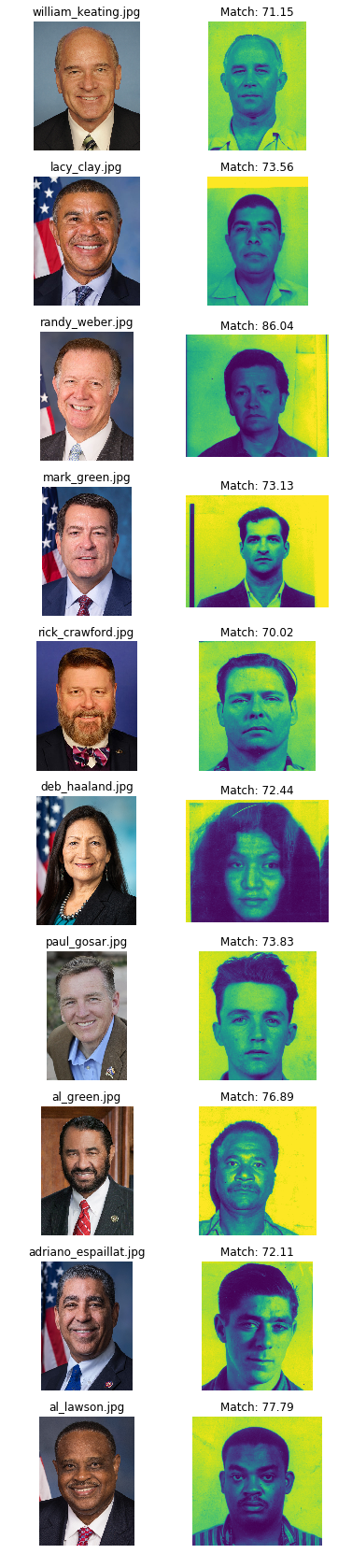

The default threshold to be considered a match is 70%. I don’t think this is appropriate in real world decision making applications, but it’s broad enough to likely include some matches.

So in total, we had 440 images of congressmen and 1,756 mugshots. How did we do?

10 mismatches.

Only one of the matches is greater than 80%.

I won’t comment about similarity but I will say that some look closer than others.

Final Thoughts

I have been thinking a lot about facial recognition and the potential it has to reshape society. Popular media characterizes the technology as both as an all too powerful technology that will lead to a surveillance state and a primative tool that will lead to many false convictions. I think there are both good and bad reasons to be suspicious of the technology.

Bad criticism: The technology itself is bias

From the ACLU article that performed a similar analysis:

Nearly 40 percent of Rekognition’s false matches in our test were of people of color, even though they make up only 20 percent of Congress.

My analysis yielded similar results with the mismatches disproportionately affecting congressmen of color. However, just from a cursory review of the mugshots, people of color were disproportionately represented in the mugshot database, so that’s not entirely fair to criticize the facial recognition technology for that. I believe a curated list of mugshots with certain characteristics would result in a similar representation of mismatches. Nothing I have read about the technology suggests that there are inherit bias.

Better criticism: A systematic method of identifying people of interest will reinforce our status quo inequalities

The process in which facial recognition technology is implemented can be abused. In fact, it’s likely to be abused by default. For instance, the simplest implementation of comparing faces with that of convicted criminals will obviously be skewed to the demographic that’s most represented in the training dataset.

Bad criticism: The technology is inaccurate

There’s already evidence that facial recognition technology has surpassed humans. Enough progress has been made and it doesn’t seem like we hit a wall. So you can’t invalidate the technology based solely on ability unless you throw out much less accurate eye witness accounts.

Better criticism: Due to the large dataset available to match against, spurious matches an be abused

In the above analyses, I compared 440 images with that of 1,756 mugshots. I performed a total of 772,640 comparisons (440 * 1,756). There were only 10 instances, with relatively low match probabilities. Just stating the match probabilities without the larger context (772k matches performed), a person could gain a false confidence that the results are stronger than they are.

Playing tricks using statistics is not new. OJ’s defense lawyer argued that despite OJ being an abusive husband, it is statistically unlikely that he killed his wife.

Only one in a thousand abusive husbands eventually murder their wives

But this ignores the fact that OJ’s wife was murdered. The more pertinent question is what percentage of murdered women were murdered by their abusive ex-husband?

Similar discretion needs to be applied when using facial recognition. The level of confidence needs to be adjusted for the number of null hypotheses made. My test had 1,756 null hypotheses for each congressman, so we shouldn’t make much of an 80% match.

Overall, facial recognition isn’t going anywhere and it does what humans can do, only better. There are potential issues with biases based on the dataset its trained on, but those can be addressed much better than biases inherit in humans. There needs to be some consensus about how to use this technology in a legal setting to prevent these abuses. But all these changes need to happen in institutions and in a legal framework. Attacking the technology is the wrong approach.