This is part 3 of a series of posts about facial recognition. In part 1 I discussed facial detection, and in part 2 I discussed facial landmarks. In this post, I’ll look at how accurate some off-the-shelf facial recognition tools are and how well they hold up as a subject ages.

I found this video of a man (boy?) who took a picture of himself every day for 10 years, starting at age 12. Here are the first few frames and their landmarks.

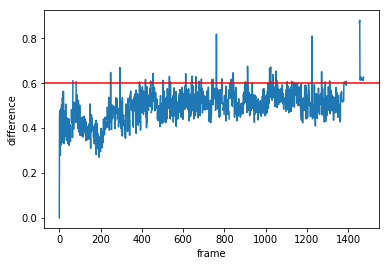

I wanted to check how his face vectors changed over time and how a 0.6 distance threshold would hold up over so many years. Note that recognition uses only the first and earliest picture of the person as the reference for identifying every face from that point on. I used the dlib facial recognition model through the face_recognition Python wrapper, with a distance cutoff of 0.6, which purportedly gives an accuracy of 99.38%.
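The comparison described above can be sketched roughly as follows. This is a minimal illustration, not the exact script behind this post: `encode_faces` and `classify_frame` are hypothetical helper names, the file names are placeholders, and taking the closest face when a frame contains several people is an assumption. The `face_recognition` calls (`load_image_file`, `face_encodings`) are the wrapper's documented functions, and the distance is the plain Euclidean distance between 128-dimensional encodings.

```python
import math

THRESHOLD = 0.6  # the distance cutoff used in this post


def encode_faces(image_path):
    """Return one 128-d encoding per face found in the image (may be empty)."""
    # Imported lazily so the pure-Python helper below works without dlib installed.
    import face_recognition

    image = face_recognition.load_image_file(image_path)
    return face_recognition.face_encodings(image)


def classify_frame(reference, candidates, threshold=THRESHOLD):
    """Compare every face found in a frame against the reference encoding.

    Returns (label, distance): label is "no_face", "match" or "mismatch";
    distance is the smallest Euclidean distance, or None when no face was found.
    Assumption: when several faces are present, the closest one is used.
    """
    if len(candidates) == 0:
        return "no_face", None
    distance = min(math.dist(reference, c) for c in candidates)
    label = "match" if distance <= threshold else "mismatch"
    return label, distance


# Hypothetical usage (file names are placeholders):
# reference = encode_faces("frame_0001.jpg")[0]
# label, distance = classify_frame(reference, encode_faces("frame_2500.jpg"))
```

Keeping the distance alongside the label makes it easy to plot every frame against the cutoff afterwards.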

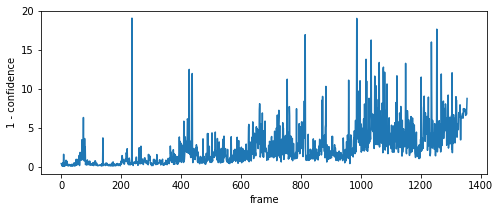

Below is a graph of the distance for every image, with the arbitrary 0.6 cutoff drawn as a red line.

Overall it’s pretty good, with a 5% mismatch rate and 10% of images where no face was found, although that falls short of the purported 99.38% accuracy. Much of the gap is due to an imperfect dataset. The distance does grow over time, as we would expect. Let’s take a look at some of the errors.
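For reference, rates like these can be tallied from the per-frame distances with something like the sketch below. The `summarize` function is a hypothetical helper, and it assumes frames with no detected face are recorded as `None`. The numbers in the usage comment are toy values, not the results from this post.

```python
def summarize(distances, threshold=0.6):
    """Return the fraction of frames that matched, mismatched, or had no face.

    distances: one entry per frame, with None where no face was found.
    """
    total = len(distances)
    if total == 0:
        raise ValueError("no frames to summarize")
    no_face = sum(1 for d in distances if d is None)
    mismatch = sum(1 for d in distances if d is not None and d > threshold)
    match = total - no_face - mismatch
    return {
        "match": match / total,
        "mismatch": mismatch / total,
        "no_face": no_face / total,
    }


# Toy example: two matches, one mismatch, one frame with no face.
# summarize([0.1, 0.7, None, 0.5])
```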

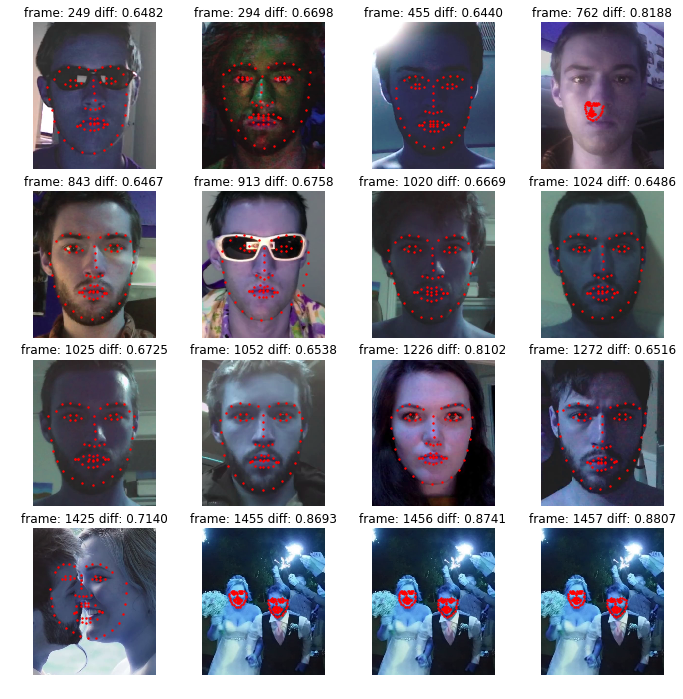

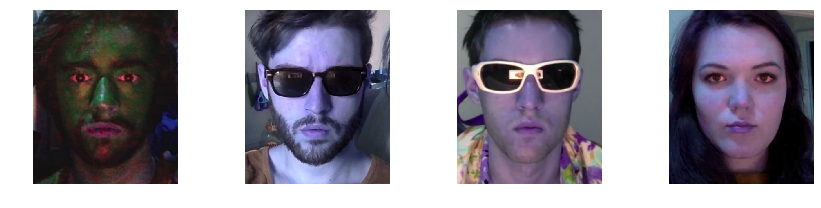

Out of the worst 16:

- 2 are wearing sunglasses

- 1 is the subject in green-face

- 1 is a nose goblin

- 1 is his wife (fiancée?)

- 4 are multiple people

Datasets used in machine learning tend to be very curated and clean. Tests on real-world images usually result in much lower accuracy. The LFW (Labeled Faces in the Wild) face recognition benchmark is no different.

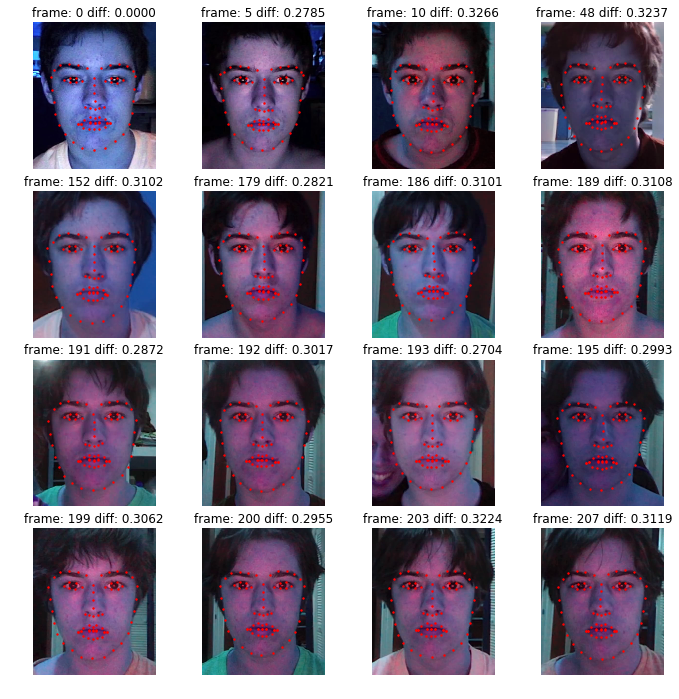

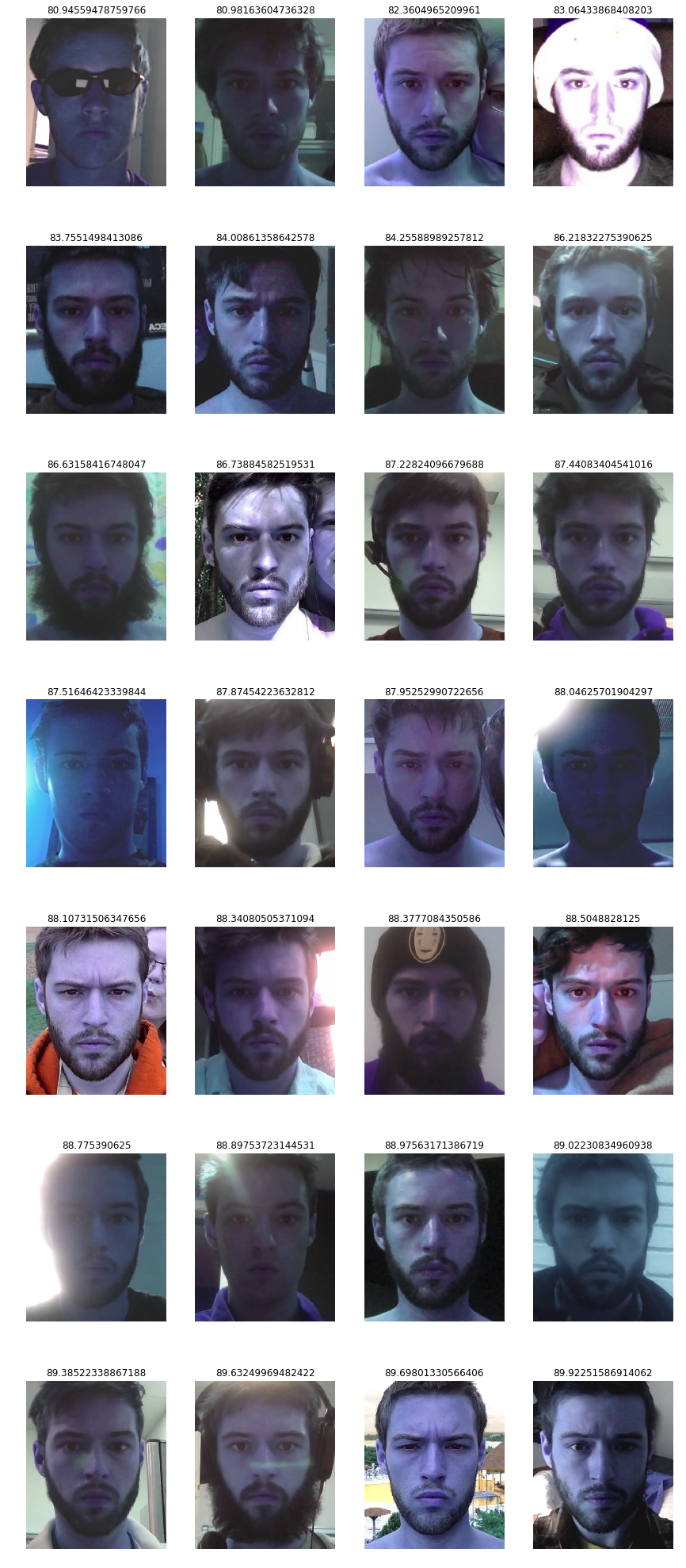

Let’s take a look at the best matches (lowest distances).

The first one is the reference image we compared every subsequent image against, which explains why its distance is 0. The other matches have a reddish hue, which may play a part in the strong match. And the lowest distances occur (understandably) while the subject was young.
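Pulling out the best and worst frames is just a matter of sorting by distance. A minimal sketch, assuming the distances are kept in a dictionary keyed by a frame identifier (the `rank_frames` helper and the keys in the example are hypothetical):

```python
import heapq


def rank_frames(distances, n=16):
    """Return (best, worst): the n lowest and n highest distances.

    distances: dict mapping a frame identifier to its distance,
    with None for frames where no face was found (these are skipped).
    """
    scored = [(d, frame) for frame, d in distances.items() if d is not None]
    best = heapq.nsmallest(n, scored)   # strongest matches first
    worst = heapq.nlargest(n, scored)   # weakest matches first
    return best, worst


# Toy example with made-up values:
# rank_frames({"a": 0.0, "b": 0.7, "c": None, "d": 0.3}, n=2)
```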

Finally, let’s take a look at the 100 or so images that found no face.

These are a little harder to analyze. There are certainly some lighting issues in a few of them, but overall these are clearly faces. It may have something to do with the redness of the subject’s eyes and the contrast of the red eyes with the overall red hue of the image.

AWS Rekognition

I ran the same test using the AWS Rekognition service. The service lets you upload two images and reports whether they match at a given level of confidence. I set the confidence threshold to 70% and all but 4 matched correctly.
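A rough sketch of such a comparison with boto3 is shown here. It assumes the 70% setting corresponds to the `SimilarityThreshold` parameter of Rekognition's `CompareFaces` operation, that AWS credentials and a region are already configured, and that images are sent as raw bytes. The helper names are hypothetical.

```python
def compare_with_rekognition(source_bytes, target_bytes, threshold=70.0):
    """Call Rekognition CompareFaces on two images given as raw bytes."""
    # Imported lazily so the parsing helper below can be used without boto3.
    import boto3

    client = boto3.client("rekognition")
    return client.compare_faces(
        SourceImage={"Bytes": source_bytes},
        TargetImage={"Bytes": target_bytes},
        SimilarityThreshold=threshold,
    )


def best_similarity(response):
    """Highest similarity among the returned matches, or None if nothing matched."""
    matches = response.get("FaceMatches", [])
    if not matches:
        return None
    return max(m["Similarity"] for m in matches)


# Hypothetical usage (file names are placeholders):
# with open("frame_0001.jpg", "rb") as src, open("frame_2500.jpg", "rb") as tgt:
#     response = compare_with_rekognition(src.read(), tgt.read())
# print(best_similarity(response))
```

Faces that fall below the threshold are not included in `FaceMatches`, so a `None` result here means no face in the target image cleared the 70% bar.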

The only 4 that didn’t match with a confidence of 70% or more can be seen below:

And the images that did match, but with a low confidence, can be seen below:

Again, glasses and lighting appear to be the main things that throw off the recognition algorithm.

Overall the AWS service is much stronger than the dlib model, which is understandable. Again, it’s important to note that the similarity is based on a single image of the subject at twelve years old.

In the next part I’ll look at the other side of the coin: spurious face matches. I’ll try to recreate the ACLU claim that AWS Rekognition falsely matched 28 members of Congress to mugshots of people who had been arrested.