In a prior post, Offline Object Detection and Tracking on a Raspberry Pi, I wrote an implementation of an offline object detection model. Since that time, aws has begun to offer some great services in that space, so I felt it was time to write about building an online object detection model with aws.

Use-Case

My use-case is the same as the original article: take a picture periodically, process it, and display some information as to the location of given objects over time.

Benefits of an Online Model

The most obvious benefit is speed. Speed isn’t always important. For my use-case, it’s not. But there is some benefit in leveraging a better model thats independent of my application. If we abstract out the image detection, we can plug in different models. The aws rekognition model is more powerful and even provides a hierarchy of the object (e.g. transportation -> vehicle -> car).

The other benefit is reduced complexity. All our hardware will do is take a picture and send it somewhere. If we keep everything local, troubleshooting and active development becomes more difficult.

And finally aws offers services precisely tailored to the task at hand. Tying in other aws services is also simple. Considering the aws free tier of 1k object detections on rekognition, 1mm requests on lambda and 5gb on s3, the added benefits may be worth it.

AWS Services

The easiest way to use aws services in python is through boto3. To use boto3, you have to first setup aws credentials.

S3

Any data consumed by aws has to pass through an S3 bucket. Fortunately, aws makes it easy to upload to S3 in python.

import boto3

s3_client=boto3.client('s3')

s3_client.upload_file("hello-local.jpeg", "spypy", "hello.jpeg")

To download

s3_client.download_file("spypy", "hello.jpeg", "hello-local.jpeg")

To delete

s3_client.delete_object(Bucket=s3_bucket, Key="hello.jpeg")

We can take an image, upload it to s3, process it with Rekognition, delete the image and return the object information.

Rekognition

Rekognition is another service accessible through boto3.

import boto3

rekognition_client=boto3.client('rekognition')

s3_bucket = "spypy"

image_name = "hello.jpeg"

image_s3 = {

'S3Object': {

'Bucket': s3_bucket, 'Name': image_name

}

}

response = rekognition_client.detect_labels(

Image=image_s3,

MaxLabels=10

)

The service has a number of features including object detection, facial recognition/analysis and text transcription. An online model would allow us to easily extend the functionality of our application by leveraging the Rekognition model.

The only downside of Rekognition is that it’s quite expensive. The free tier is 1,000 images a month, which sounds good but that only comes out to about ~33 images processed a month. After that, it’s $1 for the next 1,000 images. In another post, I’ll show how we can use our own model and bypass Rekognition entirely. It’ll be slower, more complex and may cost us more in compute time, but considering your use-case it may be worth it.

Lamda

Many of aws services are glued together using lambda. Lambda is a server-less platform that charges only for compute time. It’s perfect for apis that are seldom run. Even those that are run often, eliminating the need and complexity of maintaining a dedicated server is greatly beneficial. And best of all, it scales up automatically. There is some overhead in spinning up the instance, but for my use-case, speed isn’t important.

The lambda handler is the entry point into the function. Lambda passes along event and context parameters. The event value contains the information passed in explicitly into the function.

Below is a simple lambda function:

def lambda_handler(event, context):

return {

"isBase64Encoded": False,

'statusCode': 200,

'headers': {},

'body': "event: {} context: {}".format(event, context)

}

Once setup, you can test the function and pass in an event dictionary and see the response.

Test event:

{

"key1": "value1",

"key2": "value2",

"key3": "value3"

}

Output:

{

"isBase64Encoded": false,

"statusCode": 200,

"headers": {},

"body": "event: {'key1': 'value1', 'key2': 'value2', 'key3': 'value3'} context: <__main__.LambdaContext object at 0x7f15f5ce7ef0>"

}

Lambda can be then hooked up to API Gateway. This will expose the Lambda function to a public api.

If you want to pass in parameters via the api url, you have to turn on Use Lambda Proxy integration on the API Gateway Method Execution setup screen.

When we hit our API, we’ll get more information passed into the context parameter:

https://???/dev/empty-python-example

event: {

'resource': '/empty-python-example',

'path': '/empty-python-example',

'httpMethod': 'GET',

'headers': {...},

'multiValueHeaders': {...},

'queryStringParameters': None,

'multiValueQueryStringParameters': None,

'queryStringParameters': None,

'multiValueQueryStringParameters': None,

'pathParameters': None,

'stageVariables': None,

'requestContext': {...},

'body': None,

'isBase64Encoded': False

}

context: <__main__.LambdaContext object at 0x7f0ee1e08ef0>

From the event, you can parse the arguments:

https://???/dev/empty-python-example?key1=param1

event: {

...

'queryStringParameters': {'key1': 'param1'},

'multiValueQueryStringParameters': {'key1': ['param1']},

...

}

The final API will let you pass in a url as a string parameter and return the objects:

https://???/default/spypy-rekognition?urls=https://cdn1.autoexpress.co.uk/sites/autoexpressuk/files/2/22/dsc112-733_0.jpg

[

{

"Labels": [

{

"Name": "Transportation",

"Confidence": 99.79230499267578,

"Instances": [],

"Parents": []

},

{

"Name": "Vehicle",

"Confidence": 99.79230499267578,

"Instances": [],

"Parents": [

{

"Name": "Transportation"

}

]

},

{

"Name": "Car",

"Confidence": 99.79230499267578,

"Instances": [

{

"BoundingBox": {

"Width": 0.3765823245048523,

"Height": 0.3229130208492279,

"Left": 0.5312161445617676,

"Top": 0.5803122520446777

},

"Confidence": 99.79230499267578

}

],

"Parents": [

{

"Name": "Vehicle"

},

{

"Name": "Transportation"

}

]

},

{

"Name": "Road",

"Confidence": 99.0093994140625,

"Instances": [],

"Parents": []

},

{

"Name": "Tarmac",

"Confidence": 97.38719940185547,

"Instances": [],

"Parents": []

},

{

"Name": "Highway",

"Confidence": 92.62093353271484,

"Instances": [],

"Parents": [

{

"Name": "Road"

},

{

"Name": "Freeway"

}

]

},

{

"Name": "Freeway",

"Confidence": 92.62093353271484,

"Instances": [],

"Parents": [

{

"Name": "Road"

}

]

},

{

"Name": "Spoke",

"Confidence": 80.9249038696289,

"Instances": [],

"Parents": []

},

{

"Name": "Tire",

"Confidence": 80.8728256225586,

"Instances": [],

"Parents": []

},

{

"Name": "Wheel",

"Confidence": 76.86323547363281,

"Instances": [],

"Parents": []

}

],

"LabelModelVersion": "2.0",

"ResponseMetadata": {...}

}

}

]

Implementation

The handler function is the entry point to our call. All it does is parse the url parameter and pass it to detect_images_from_urls. The detect_images_from_url downloads the files to an s3 bucket and runs object detection on the files. The easiest way to get aws to perform on images is passing them through an s3 bucket..

Finally there is a helper file that downloads images

Next Steps

There’s a lot to explore with aws lambda, and I may write on each individual component:

Dependencies

To include dependencies in you aws lambda functions, you have to ship the libraries as well. Additionally, you can break out the dependencies into a separate aws layer so you can share the same dependencies across multiple functions.

The dependencies can cause some trouble because they can be large. For example, if you want to include tensorflow, that’s about 300 MB. Since there’s a limit of 250 MB unzipped for each lambda function, that means you can’t ship a lambda function that uses tensorflow. There’s a way around this however. The idea is to package up run the functionality of your project while monitoring the files referenced in the libraries. Then delete the files that were never referenced. This can reduce the size of your libraries greatly. For instance I got a tensorflow package down to ~125 MB. Another hack is to use the /tmp/ directory to store information. That can hold up to 500 MB.

I wrote a few useful shell scripts to automate this process and get the project ready to be uploaded into aws. I’ll write a post about that soon.

Outputting images

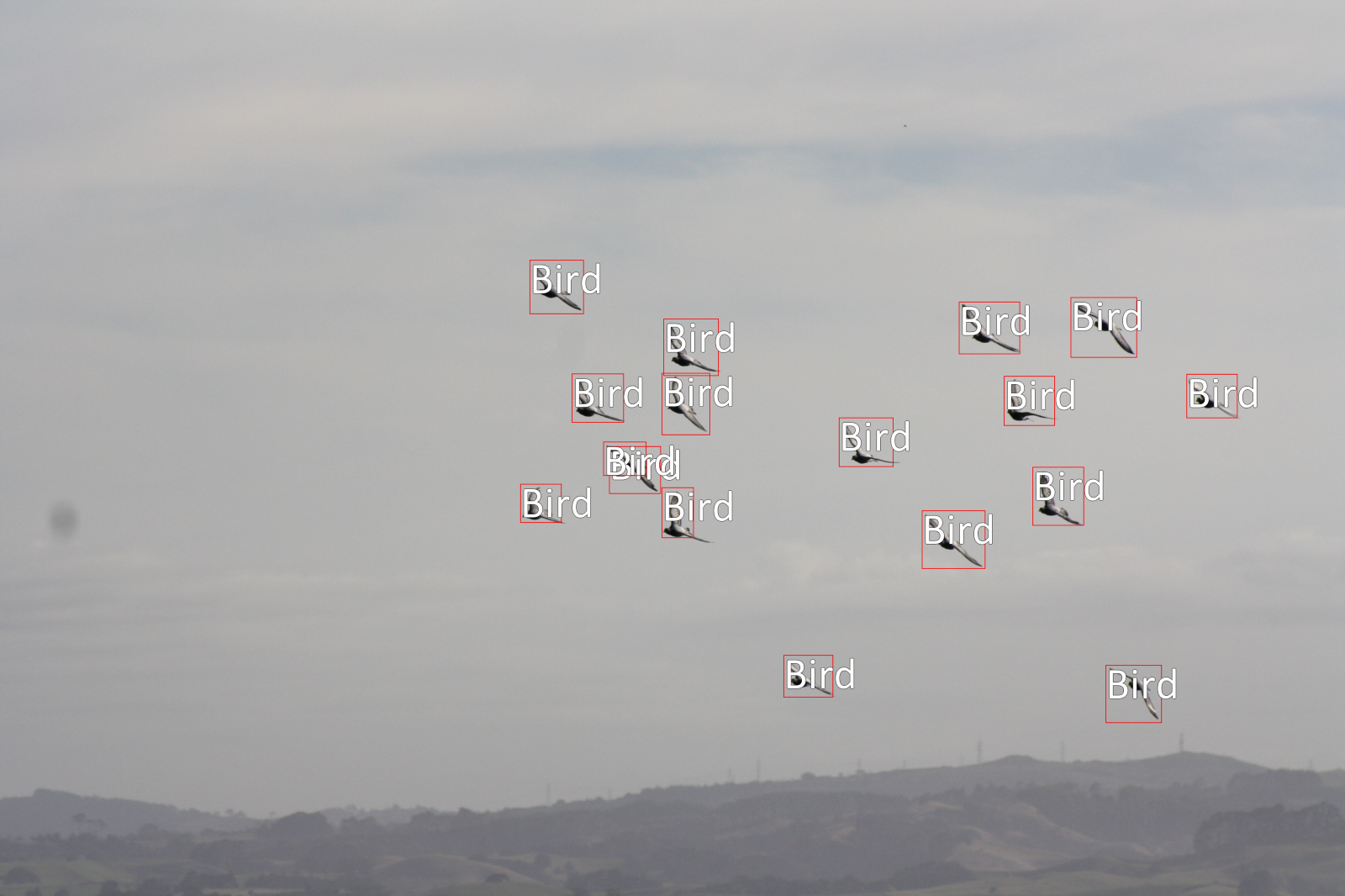

Our functionality can be easily extended to return an image with each object detected:

It’s a little tricky to get aws API Gateway to return images, but it’s possible.