My latest obsession has been writing bots for Reddit. Reddit is an online forum that hosts a range of topics, everything from news and politics to strange, possibly NSFW captions to WikiHow images. But mostly, it’s just memes.

What’s nice about Reddit is the sheer volume of content. Apparently 6% of internet users in the US used the social network, so it’s a treasure trove of user-generated content. But the best part is the Reddit API, specifically PRAW, the Python API wrapper. PRAW handles rate limiting for you and lets you drink from the proverbial fire hose by streaming every post or comment submitted to the site live. That’s around 11 million posts a month and 2.8 million comments a day. And you can scrape them all with:

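```python
# r is an authenticated praw.Reddit instance
for c in r.subreddit("all").stream.comments(pause_after=-1):
    if c is None:  # pause_after=-1 makes the stream yield None between batches
        continue
    do_something(c)
```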

Well, not exactly. I do notice that some comments fly under the radar and never trigger my bots, which I suspect is due to PRAW’s internal rate limiting or latency on my end.

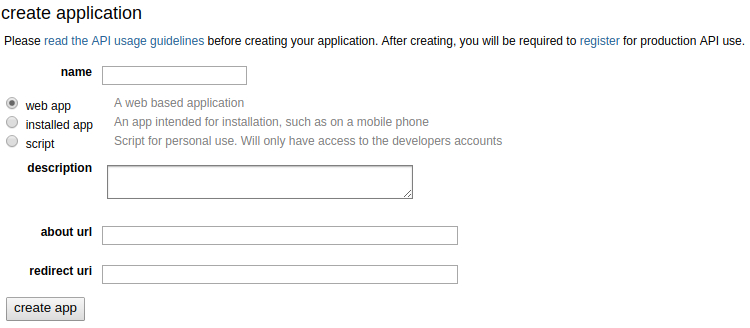

And adding app authorization is as simple as Settings -> App Authorization -> Create Application.

Don’t worry: only the name and redirect URI are actually required, and the redirect URI can be localhost.

Create as many apps as you want. Create as many accounts as you want with one email.
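Once the app exists, PRAW needs its client ID and secret plus the bot account’s login. Here is a minimal sketch of wiring that up for a password-based “script” app. The environment variable names and the default user agent string are my own assumptions; the one thing taken from PRAW is that `praw.Reddit` accepts `client_id`, `client_secret`, `user_agent`, `username` and `password` as keyword arguments.

```python
import os

# Hypothetical environment variable names; pick whatever suits your deployment.
ENV_VARS = {
    "client_id": "REDDIT_CLIENT_ID",
    "client_secret": "REDDIT_CLIENT_SECRET",
    "username": "REDDIT_USERNAME",
    "password": "REDDIT_PASSWORD",
}


def reddit_credentials(env=None, user_agent="python:my-reddit-bot:v0.1"):
    """Build the keyword arguments for praw.Reddit from environment variables."""
    env = os.environ if env is None else env
    missing = [var for var in ENV_VARS.values() if not env.get(var)]
    if missing:
        raise RuntimeError("missing environment variables: " + ", ".join(missing))
    creds = {kwarg: env[var] for kwarg, var in ENV_VARS.items()}
    creds["user_agent"] = user_agent  # Reddit asks for a descriptive, unique user agent
    return creds


def make_reddit(env=None):
    """Return an authenticated praw.Reddit instance (requires `pip install praw`)."""
    import praw  # imported lazily so reddit_credentials() works without PRAW installed

    return praw.Reddit(**reddit_credentials(env))
```

With the variables set, `r = make_reddit()` gives you the `r` used in the streaming loop above. Keeping secrets in the environment rather than in the script means the same code can run every bot account you create.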

Ease of access and a good API play no small role in Reddit’s success.

The API doesn’t just benefit people like me; it also helps the hundreds of moderators who make sure everyone stays in line. Reddit decentralized the moderation process, although controversies still exist.

Drinking From the Fire Hose

Despite the ease of the API, some boilerplate is required. As with most things involving web scraping, it mostly consists of wrapping everything in a try-except and logging. When you’re drinking from the fire hose and you start to choke, you just have to step back for a second and keep going.
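In practice that boilerplate can look something like the sketch below: restart the stream whenever it raises, log every failure, and wait a little longer after each restart so you don’t hammer the API. This is an illustration, not my platform’s actual code. `make_stream` is any zero-argument callable returning an iterable, for example `lambda: r.subreddit("all").stream.comments(pause_after=-1)`, and the backoff numbers are arbitrary.

```python
import logging
import time

log = logging.getLogger("firehose")


def drink_from_fire_hose(make_stream, handle, max_restarts=None,
                         backoff=5.0, sleep=time.sleep):
    """Feed every item from make_stream() to handle(), restarting the stream on failure."""
    restarts = 0
    while True:
        try:
            for item in make_stream():
                if item is None:  # PRAW streams with pause_after yield None when idle
                    continue
                try:
                    handle(item)
                except Exception:
                    # One bad item shouldn't take the whole bot down
                    log.exception("handler failed on %r", item)
            return  # the stream ended on its own (a live PRAW stream normally never does)
        except Exception:
            restarts += 1
            log.exception("stream failed (restart #%d)", restarts)
            if max_restarts is not None and restarts > max_restarts:
                raise
            sleep(backoff * restarts)  # step back a little longer each time, then keep going
```

Two levels of try-except matter here: the inner one isolates a single bad comment, the outer one survives the stream itself dying (network errors, Reddit hiccups). Injecting `sleep` also makes the loop testable without real waits.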

I wrote a simple bot platform that I’ll eventually write a post about. It lets me define a bot by plugging in a few functions: a filter for posts, a filter for comments, and an action to take. Here is an example of a bot that replies “Nice” whenever two “Nice” comments appear in a row, i.e. a “Nice” replying to a “Nice” (don’t ask, it’s a Reddit thing).

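```python
from bot import Bot  # my own bot platform (not yet published)
from utils.utils import get_commented_posts
from praw.models.reddit.comment import Comment

NAME = "RepliesNice"

# IDs of posts this bot has already commented on, loaded from its log
already_commented = get_commented_posts("logs/{}.log".format(NAME))

NICE = "Nice"
ACCEPTABLE_NICE = {"Nice", "Nice."}


def action(p, c, test):
    """Reply "Nice" to comment c on post p (no reply is posted in test mode)."""
    global already_commented
    # Remember the post so the bot only comments once per thread
    already_commented = already_commented.union({p.id})
    if not test:
        r = c.reply(NICE)
        if r is not None:  # newer PRAW versions may return None
            r.disable_inbox_replies()  # don't flood the bot's inbox
    return NICE


def get_comment_body(comment):
    """Return the body of a comment, or None if the parent is a submission."""
    if isinstance(comment, Comment):
        return comment.body
    return None


def get_bot():
    bot = Bot(NAME)
    # Only act on live threads the bot hasn't already commented in
    bot.is_valid_post = lambda p: not p.archived and p.id not in already_commented
    # Trigger on a "Nice" that is itself a reply to a "Nice"
    bot.is_valid_comment = lambda c: (
        c.body in ACCEPTABLE_NICE and get_comment_body(c.parent()) in ACCEPTABLE_NICE
    )
    bot.action = action
    return bot


if __name__ == "__main__":
    bot = get_bot()
    bot.monitor_comments_live("all")
```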

Here’s the bot in action reaping some of that sweet, sweet Reddit karma
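Since the platform itself isn’t published yet, here is a hypothetical minimal stand-in for `Bot` that shows how the `is_valid_post`, `is_valid_comment` and `action` hooks could fit together. It is not the real implementation: `process_comment` and `monitor_comments` are names invented for this sketch, and the only PRAW detail it relies on is that a comment exposes its thread as `.submission`, so it can be exercised with plain stub objects.

```python
import logging

log = logging.getLogger("bot")


class Bot:
    """Hypothetical minimal stand-in for the unpublished bot platform."""

    def __init__(self, name):
        self.name = name
        # Default hooks accept everything and do nothing; each bot overrides them.
        self.is_valid_post = lambda p: True
        self.is_valid_comment = lambda c: True
        self.action = lambda p, c, test: None

    def process_comment(self, c, test=False):
        """Run both filters on one comment and, if they pass, return the action's result."""
        p = c.submission  # PRAW comments expose their thread as .submission
        # The comment filter is skipped entirely if the post filter fails
        if not (self.is_valid_post(p) and self.is_valid_comment(c)):
            return None
        return self.action(p, c, test)

    def monitor_comments(self, comments, test=False):
        """Process an iterable of comments; return how many the bot acted on."""
        acted = 0
        for c in comments:
            if c is None:  # PRAW streams created with pause_after yield None
                continue
            try:
                result = self.process_comment(c, test)
            except Exception:
                log.exception("%s failed on comment %s", self.name, getattr(c, "id", "?"))
                continue
            if result is not None:
                acted += 1
                log.info("%s acted on comment %s: %r", self.name, getattr(c, "id", "?"), result)
        return acted
```

Fed `r.subreddit("all").stream.comments(pause_after=-1)`, `monitor_comments` would play roughly the role of `monitor_comments_live("all")`, and the `test` flag lets you dry-run a bot against real traffic without posting anything.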

Other bots I’ve written include:

- A bot that is triggered by “Alexa play [song]” and returns a YouTube link

- A bot that’s triggered by “[Good|Bad] bot” and tracks the votes on an accompanying website

- A bot that colorizes images

- A bot that enhances images (currently offline)

- A bot that downloads comments for nefarious NLP purposes
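Most of these bots boil down to a trigger check on each comment’s body. Here is a sketch of how the first two triggers could be matched with regular expressions. The exact patterns (an optional comma after “Alexa”, trailing punctuation stripped from the song, “Good bot” only at the start of a comment) are my own choices for illustration, not necessarily what the running bots use.

```python
import re

# "Alexa play [song]" anywhere in a comment, with or without a comma after "Alexa"
ALEXA_PLAY = re.compile(r"\balexa,?\s+play\s+(?P<song>.+)", re.IGNORECASE)
# "Good bot" / "Bad bot" at the start of a comment
BOT_VOTE = re.compile(r"^\s*(?P<vote>good|bad)\s+bot\b", re.IGNORECASE)


def parse_trigger(body):
    """Return ("play", song), ("vote", "good"|"bad"), or None for a comment body."""
    match = ALEXA_PLAY.search(body)
    if match:
        # "." stops at the end of the line; drop trailing punctuation like "!"
        song = match.group("song").strip().rstrip(".!?")
        if song:
            return ("play", song)
    match = BOT_VOTE.match(body)
    if match:
        return ("vote", match.group("vote").lower())
    return None
```

Anchoring the vote pattern at the start of the comment is a deliberate trade-off: it misses “that’s a good bot”, but it also avoids counting sentences that merely mention a good or bad bot.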

Colorizer Bot

My favorite bot is the colorizer bot, which trolls the subreddits pics, images, oldschoolcool, and historyporn, although it was recently banned from historyporn :-(

The bot is based on the Colorful Image Colorization repo. It downloads every image posted on these subreddits and checks whether it’s black and white; if so, it spins up a Docker container to colorize the image and uploads the result to Imgur. The bot can also be triggered in other subreddits by the keyword “colorize”.
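The black-and-white check is where things get interesting. I won’t claim this is exactly how the bot does it, but one simple heuristic is to count pixels whose red, green and blue values differ by more than a small tolerance; if almost none do, the image is effectively grayscale. The sketch takes any iterable of `(r, g, b)` tuples, such as Pillow’s `Image.open(path).convert("RGB").getdata()`; the `tolerance` and `max_color_fraction` defaults are arbitrary assumptions.

```python
def looks_black_and_white(pixels, tolerance=10, max_color_fraction=0.01):
    """Guess whether an image is grayscale from an iterable of (r, g, b) pixels.

    A pixel counts as "colorful" when its channels differ by more than `tolerance`;
    the image passes if at most `max_color_fraction` of its pixels are colorful.
    """
    total = colorful = 0
    for r, g, b in pixels:
        total += 1
        if max(r, g, b) - min(r, g, b) > tolerance:
            colorful += 1
    if total == 0:
        return False  # an empty image isn't worth colorizing
    return colorful / total <= max_color_fraction
```

Note that a sepia-toned print fails this check even though it is “black and white” in spirit, which is one of the ways a simple heuristic like this can misfire.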

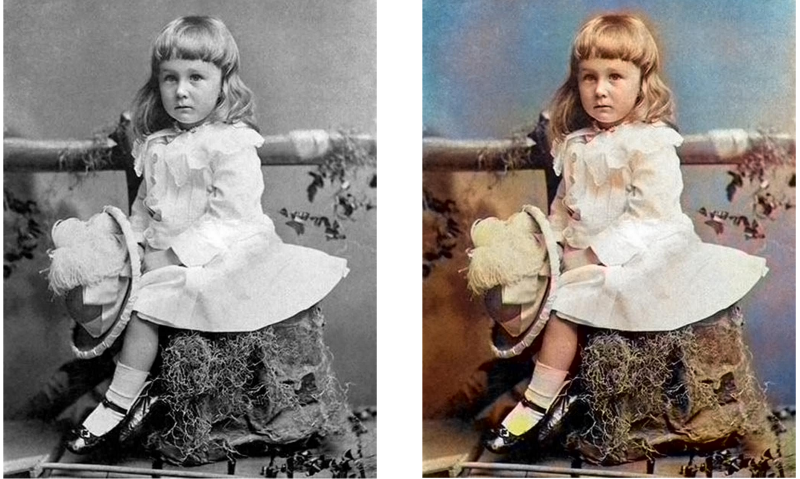

Here’s an example of FDR as a boy:

FDR as a boy. A very pretty boy

The results are hit-or-miss. One problem is that many of the images processed are black and white because they’re old, and the neural network was trained on modern images.

Practically every modern image that just had its color removed came out great.

The solution would be to fine-tune the model on more colorized historical images, and of course there’s a subreddit for that. If only there were an easy way to write a bot to gather and process all those submissions…
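For what it’s worth, gathering that data is mostly a filtering job. Colorization models of this kind are typically trained by stripping the color out of a color photo and learning to put it back, so colorized images alone could in principle supply training pairs. Here is a sketch that keeps only direct image links from submission-like objects; PRAW’s `Submission` has `url` and `over_18` attributes, so something like `reddit.subreddit(name).top(limit=1000)` could feed it, with `name` left as a placeholder. The extension list is an assumption, and gallery or album links are simply skipped.

```python
from urllib.parse import urlparse

# Assumed set of direct-image extensions; albums and galleries are skipped
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def collect_image_urls(submissions, limit=None):
    """Return direct image URLs from submission-like objects with .url and .over_18."""
    urls = []
    for submission in submissions:
        if getattr(submission, "over_18", False):  # skip NSFW posts
            continue
        path = urlparse(submission.url).path.lower()  # ignores any ?query string
        if path.endswith(IMAGE_EXTENSIONS):
            urls.append(submission.url)
            if limit is not None and len(urls) >= limit:
                break
    return urls
```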

Your model is only as good as its training data.

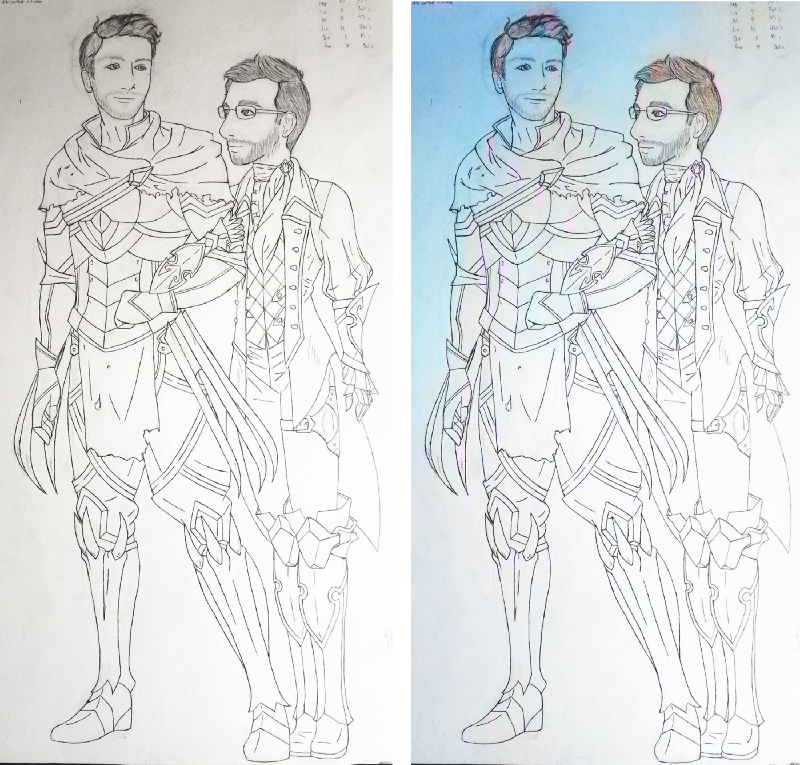

The other problem is that the black-and-white criterion is not foolproof.

To fix that, I could compare the original and colorized images and make sure the bot changed something more than a light shading of a person’s hair. But I actually like these happy little accidents.
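If I ever do want to filter those out, a crude version of that comparison is the mean absolute per-channel difference between the original and colorized pixels, checked against a minimum. This sketch assumes both images are the same size and given as sequences of `(r, g, b)` tuples; the `min_mean_diff=8.0` threshold is a made-up starting point, not a tuned value.

```python
def mean_abs_diff(original, colorized):
    """Average absolute per-channel difference between two same-sized RGB pixel sequences."""
    original, colorized = list(original), list(colorized)
    if not original or len(original) != len(colorized):
        raise ValueError("images must be non-empty and the same size")
    total = sum(
        abs(a - b)
        for before, after in zip(original, colorized)
        for a, b in zip(before, after)
    )
    return total / (3 * len(original))


def colorization_worth_posting(original, colorized, min_mean_diff=8.0):
    """True if the colorized image differs from the original by more than a light tint."""
    return mean_abs_diff(original, colorized) >= min_mean_diff
```

Because it averages over the whole image, a result that only tints a small patch, like a bit of hair, barely moves the score and would be rejected, while a full colorization clears the bar easily.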

More Bots

I’ll continue working on new and novel bots and post my bot management system when I clean it up. The biggest obstacle is developing useful bots rather than novelty bots. If you look at the most popular bots, you’ll see that a lot of them are very niche. The other problem is triggering the bots. Many of the most popular bots have keywords that people trigger on purpose, which proves their utility. But a trigger only becomes known once the bot is popular, so it’s a bit of a chicken-and-egg problem. You can latch on to a common expression, as I did with “Alexa, play [song]”, or just troll an entire subreddit. But post too much and you’ll get banned.
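The “post too much” part is at least easy to guard against with a per-subreddit throttle. Subreddit rules differ and ban thresholds aren’t published as numbers, so the defaults below (3 replies per subreddit per hour) are purely illustrative assumptions. The clock is injectable so the logic can be tested without waiting.

```python
import time
from collections import defaultdict, deque


class PostThrottle:
    """Allow at most `max_posts` replies per subreddit in any rolling `window` of seconds."""

    def __init__(self, max_posts=3, window=3600.0, clock=time.monotonic):
        self.max_posts = max_posts
        self.window = window
        self.clock = clock
        self._history = defaultdict(deque)  # subreddit -> timestamps of recent posts

    def allow(self, subreddit):
        """Record and permit a post if under the limit; otherwise return False."""
        now = self.clock()
        history = self._history[subreddit.lower()]
        # Forget posts that have aged out of the window
        while history and now - history[0] >= self.window:
            history.popleft()
        if len(history) >= self.max_posts:
            return False
        history.append(now)
        return True
```

A bot would check `throttle.allow(c.subreddit.display_name)` before calling `c.reply(...)` and simply skip the comment when it returns `False`.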

Here are some ideas for future bots I may or may not work on:

- Bullshit Bot: Checks comments for declarative statements (e.g. I was born in [location]), cross-references them with other statements from that user’s history, and calls bullshit if they conflict

- Enhance! Bot: Triggered by “Enhance!”, it enhances image resolution. The problem with this one is that it needs more resources than my server can support just yet. I’m considering using AWS Lambda to handle all my image-processing bots

- Turing Bot: Just a chatbot that replies selectively based on similar threads it has processed. It can be trained to have a particular sentiment or be tailor-made for a particular group of subreddits

- Joke Explain Bot: A bot that explains jokes. There was a “bot” that did this, although it was almost certainly someone explaining jokes by hand and trying to pass it off as a bot.

Example:

I always park in handicapped spaces at the hospital, just to test their patients

Explanation:

Patients sounds like patience.

Or another:

A nun at a Catholic school was asking her 10-year-old students what they wanted to be when they grew up.

“Susie, what do you want to be when you grow up?”

Susie said “I want to be a doctor.”

“Very nice,” the nun said. “Jenny, what do you want to be when you grow up?”

Jenny said “I want to be a teacher.”

“Excellent answer,” the nun replied. “Martha, what are you going to be when you grow up?”

Martha replies “I want to be a prostitute.”

Hearing that, the nun faints.

The little girls all rush forward to the nun lying on the ground and try to help her. Shortly the nun regains consciousness and says in a weak voice, “Martha, what did you just say you wanted to be when you grew up?”

Martha says “I said I wanted to be a prostitute.”

“Oh, thank goodness,” the nun said, “for a moment I thought you said you wanted to be a Protestant.”

Explanation:

**Catholics** are stereotypically perceived to dislike **Protestants**.

This can _probably_ be done with a neural network, but it is likely not a trivial problem and may have to be limited in scope. Either way, if it can be done, Reddit would be the perfect place to train and launch.

By Branko Blagojevic on September 7, 2018